From The Vault: Diving into Big O, Big Theta, and Big Omega

Welcome to the first post in a new series I’m starting, “From The Vault”. In this series, I’ll be sharing some of the notes, thoughts, and insights I’ve recorded over the course of my studies in Software Engineering at Iowa State and beyond. These notes come from my personal vault (my Obsidian vault).

Today, we’re delving into the world of computational complexity, specifically focusing on Big O, Big Theta, and Big Omega. They are fundamental concepts in the study of algorithm efficiency and performance, and they were covered during my COM S 311 class at Iowa State University.

Note: While these notes are based on content directly from my vault, they have been parsed and reorganized prior to sharing to make more sense within a blog post.

Understanding Asymptotic Efficiency

Asymptotic Efficiency examines how the running time of an algorithm increases with the input size, particularly as the input size approaches infinity. It’s vital for evaluating algorithms beyond just raw execution times, which can vary between different hardware.

The Big Three of Computational Complexity

Big-O Notation ($O$)

Big-O gives an upper bound on the asymptotic behavior of a function, essentially defining the maximum rate at which the function can grow.

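Mathematical Definition: If positive constants $c$ and $n_0$ exist such that $0 \le f(n) \le c \cdot g(n)$ for all $n \ge n_0$, then $f(n) = O(g(n))$.

- It provides an asymptotic upper bound, so $f(n)$ grows no faster than a constant multiple of $g(n)$

- When we discuss an algorithm having $O(n)$ complexity, it means the algorithm takes at most linear time relative to the input size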
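To make the upper-bound inequality concrete, here is a minimal Python sketch that tests $f(n) \le c \cdot g(n)$ over a finite range. The helper name `is_bounded_above`, the example functions, and the constants are assumptions chosen for illustration, not part of the original notes. A finite check can only support a Big-O claim, never prove it.

```python
def is_bounded_above(f, g, c, n0, n_max=10_000):
    """Check 0 <= f(n) <= c * g(n) for every integer n in [n0, n_max]."""
    return all(0 <= f(n) <= c * g(n) for n in range(n0, n_max + 1))


# Hypothetical example functions (not from the original notes).
def f(n):
    return 3 * n + 10


def g(n):
    return n


# 3n + 10 <= 4n holds exactly when n >= 10, so c = 4 and n0 = 10 work.
print(is_bounded_above(f, g, c=4, n0=10))

# With c = 3 the inequality 3n + 10 <= 3n can never hold.
print(is_bounded_above(f, g, c=3, n0=1))
```

The choice of constants is not unique: any larger $c$ or larger $n_0$ would also witness the same bound.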

Size of $n$ based on input type:

| Type | Input Size |
|---|---|
| integer | # of bits or digits |
| array | # of elements |
| string | length of string |
| graph | # of vertices or edges |
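As a rough illustration of the table, the sketch below measures each kind of input size in Python. The sample values and the adjacency-list representation of the graph are assumptions made for this example.

```python
# Hypothetical sample inputs (not from the original notes).
number = 1000
array = [4, 8, 15, 16, 23, 42]
text = "asymptotic"
graph = {"a": ["b", "c"], "b": ["c"], "c": []}  # directed adjacency list

sizes = {
    "integer (bits)": number.bit_length(),
    "integer (digits)": len(str(number)),
    "array (elements)": len(array),
    "string (length)": len(text),
    "graph (vertices)": len(graph),
    "graph (edges)": sum(len(neighbors) for neighbors in graph.values()),
}

for kind, n in sizes.items():
    print(f"{kind}: n = {n}")
```

Note that an integer's size is measured by how many bits or digits it takes to write down, not by its value, which is why 1000 counts as a small input here.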

Big-Omega Notation ($\Omega$)

Big-Omega is about the lower bound, indicating the minimum growth rate of a function.

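Mathematical Definition: If positive constants $c$ and $n_0$ exist such that $0 \le c \cdot g(n) \le f(n)$ for all $n \ge n_0$, then $f(n) = \Omega(g(n))$.

- It gives an asymptotic lower bound on a function’s behavior, so $f(n)$ grows at least as fast as a constant multiple of $g(n)$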
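As a small worked illustration, take the hypothetical function $f(n) = n^2 + 5n$ with $g(n) = n^2$ (both chosen here for illustration, not taken from the original notes). The standard lower-bound condition $c \cdot g(n) \le f(n)$ for all $n \ge n_0$ appears to be satisfied with $c = 1$ and $n_0 = 1$:

$$
0 \le 1 \cdot n^2 \le n^2 + 5n \quad \text{for all } n \ge 1 \;\Longrightarrow\; n^2 + 5n = \Omega(n^2)
$$

The same function is also $\Omega(n)$, which shows that a lower bound does not have to be tight.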

Big-Theta Notation ($\Theta$)

Big-Theta provides a tight bound, showing that a function grows precisely at a certain rate.

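Definition: If positive constants $c_1$, $c_2$, and $n_0$ exist such that $0 \le c_1 \cdot g(n) \le f(n) \le c_2 \cdot g(n)$ for all $n \ge n_0$, then $f(n) = \Theta(g(n))$.

- It “sandwiches” the function between $c_1 \cdot g(n)$ and $c_2 \cdot g(n)$, denoting functions with the same growth rate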
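The sandwich idea can be sketched numerically. The helper `is_sandwiched`, the example functions, and the constants below are assumptions for illustration. As with any finite check, a passing result only suggests a tight bound and does not prove it.

```python
def is_sandwiched(f, g, c1, c2, n0, n_max=10_000):
    """Check c1 * g(n) <= f(n) <= c2 * g(n) for every integer n in [n0, n_max]."""
    return all(c1 * g(n) <= f(n) <= c2 * g(n) for n in range(n0, n_max + 1))


# Hypothetical example functions (not from the original notes).
def f(n):
    return 2 * n * n + 3 * n


def quadratic(n):
    return n * n


def cubic(n):
    return n ** 3


# 2n^2 <= 2n^2 + 3n <= 3n^2 holds once 3n <= n^2, that is, for n >= 3.
print(is_sandwiched(f, quadratic, c1=2, c2=3, n0=3))

# n^3 eventually outgrows f, so the lower half of the sandwich fails.
print(is_sandwiched(f, cubic, c1=1, c2=3, n0=3))
```

The second call fails because $n^3$ is an upper bound on this function but not a lower bound, so the two are not in the same growth class.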

Simplifying with Examples

Big-O: If , we simplify this to , disregarding constants and lower order terms.

Big-Omega: For , we can say it’s , suggesting it grows at least as fast as .

Big-Theta: If we have , it simplifies to , indicating it grows exactly as for large values of .
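One way to see why constants and lower order terms can be dropped is to watch the ratio between a polynomial and its leading power. The function below, $5n^2 + 3n + 7$, is a hypothetical example chosen for this sketch, not one from the original notes.

```python
def f(n):
    """Hypothetical polynomial: 5n^2 + 3n + 7."""
    return 5 * n**2 + 3 * n + 7


def ratio(n):
    """Ratio of f(n) to its dominant term's power, n^2."""
    return f(n) / n**2


for n in (10, 100, 1_000, 10_000):
    print(n, ratio(n))
```

The ratio settles toward the leading coefficient 5 as $n$ grows, which is consistent with treating this function as $\Theta(n^2)$: the $3n + 7$ part contributes less and less.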

Examples from Class

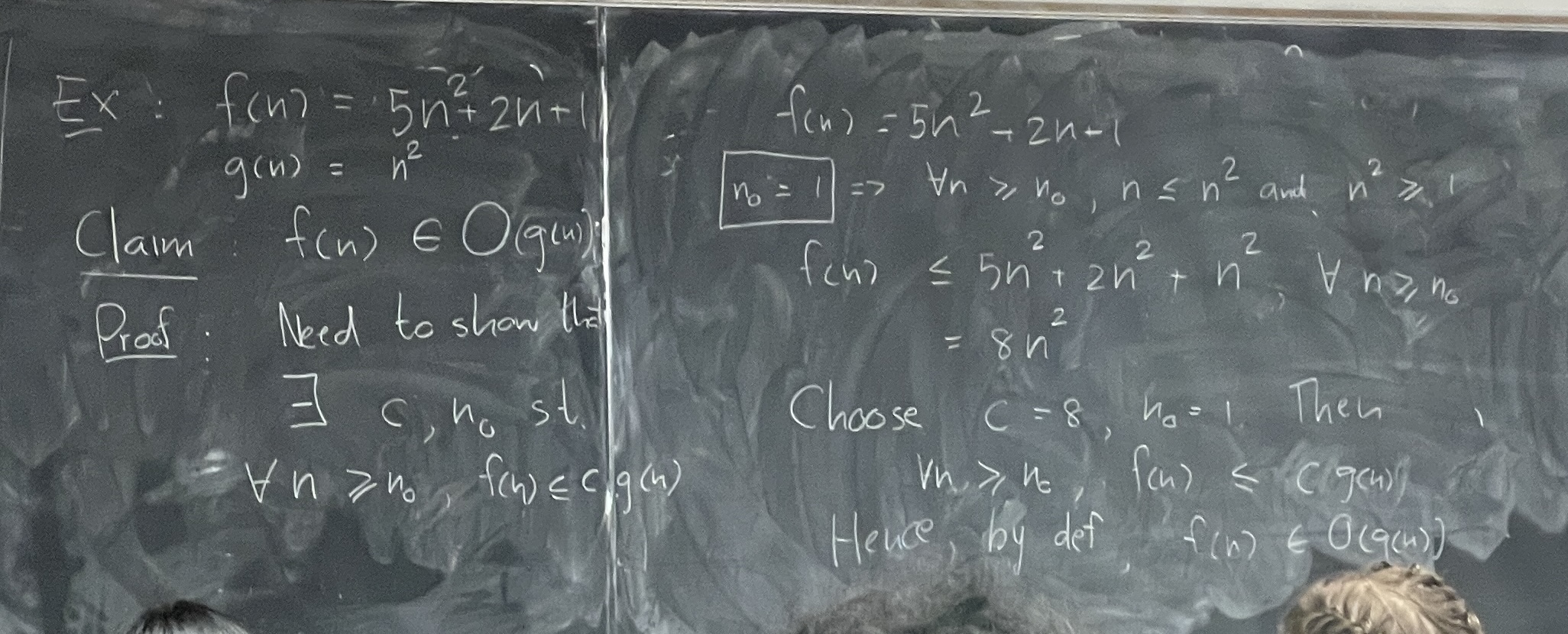

Example 1

and

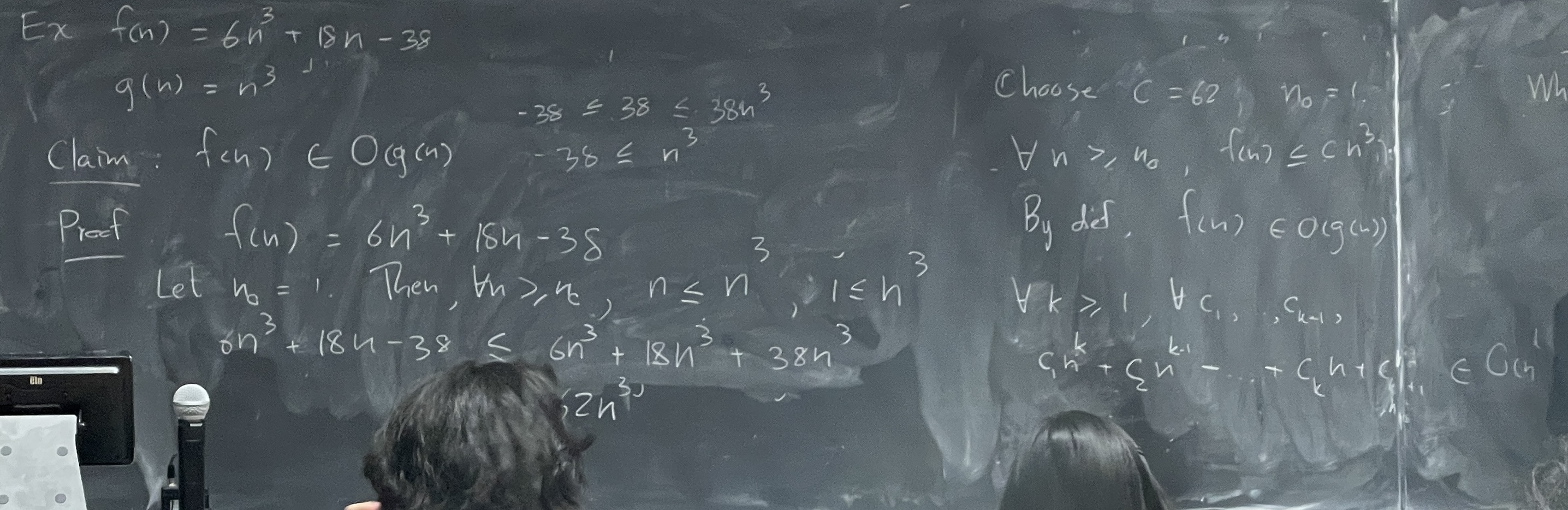

Example 2

and

Claim:

Example 3

- Intuition tells us that grows faster than

Claim:

Proof by contradiction:

- Assume

- By definition such that →

- But is a constant and an arbitrary cannot be smaller than

- This is a contradiction, so must be true

Example 4

and

Claim:

Mathematical Rule:

Proof:

- Choose ,

- By definition,

Example 5

and

Claim:

Proof:

- Tangent:

- Choose ,

- By definition,

Extra: Review of log

- If , then

Formal Derivations of Runtime

Expectation: Express runtime in Big-O notation

Example 1

    for (i = 1; i <= n; i++) {
        print(i)
    }

Goal: Derive the runtime of this loop as a function of $n$.

Analysis:

- For each iteration: how many primitive operations are done?

- There’s a comparison (`i <= n`), a print, and an increment → each of these takes constant time

- Let $c$ be the number of primitive operations done in one iteration

- During each iteration, $c$ primitive operations are done

| i | # Primitive Operations |
|---|---|
| 1 | c |
| 2 | c |
| 3 | c |
| … | … |

- This sums to $c + c + \dots + c$, or $c$ added $n$ times

- Therefore, the total number of primitive operations is $c \cdot n$

- Thus, the runtime of this algorithm is $O(n)$
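The count can be checked empirically with a small Python sketch. It charges a fixed cost per iteration. The default of 3 (comparison, print, increment) is an assumption for illustration, since the real constant depends on how primitive operations are counted.

```python
def count_single_loop(n, cost_per_iteration=3):
    """Tally primitive operations for a loop that runs i = 1..n."""
    operations = 0
    for _ in range(1, n + 1):
        operations += cost_per_iteration  # comparison + print + increment
    return operations


for n in (10, 20, 40):
    print(n, count_single_loop(n))
```

Doubling $n$ doubles the tally regardless of the per-iteration constant, which is the behavior linear growth describes.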

Example 2

    for (i = 1; i <= n; i++) {
        for (j = 1; j <= n; j++) {
            // constant # of primitive operations
        }
    }

Analysis:

- Start with the innermost loop → each iteration consists of a constant number of primitive operations, say $c$ operations; from the previous example we know that the inner loop performs $c \cdot n$ primitive operations in total

- For a single iteration of the outer loop, the number of primitive operations is the inner loop sum + comparison operations + increment etc.

- Call the cost of the comparison and increment operations for the outer loop $d$

- Total runtime is $n(c \cdot n + d)$ → $c \cdot n^2 + d \cdot n$

- With a polynomial runtime, we drop lower order terms and constant factors; therefore the runtime is $O(n^2)$
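A short Python sketch can confirm the shape of this total. Here `inner_cost` and `outer_cost` are assumed names for the constant work per inner iteration and per outer iteration, and their default values are arbitrary.

```python
def count_nested_loops(n, inner_cost=1, outer_cost=2):
    """Tally operations for two nested loops that each run n times."""
    operations = 0
    for _ in range(1, n + 1):
        for _ in range(1, n + 1):
            operations += inner_cost  # constant work in the loop body
        operations += outer_cost      # outer comparison and increment
    return operations


def closed_form(n, inner_cost=1, outer_cost=2):
    """Quadratic term plus linear term, matching the derivation."""
    return inner_cost * n * n + outer_cost * n


for n in (10, 100):
    print(n, count_nested_loops(n), closed_form(n))
```

For large $n$ the quadratic term dominates the tally, which is why the linear term disappears in the final bound.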

Example 3

    for (i = 1; i <= n; i++) {
        for (j = 1; j <= i; j++) {
            // constant # of primitive operations
        }
    }

Analysis:

- Start with the innermost loop → each iteration consists of a constant number of primitive operations, say $c$ operations

- However, in this case, the inner loop doesn’t run $n$ times every iteration of the outer loop

- Instead, it runs $i$ times. Therefore, for each iteration of the outer loop, the number of primitive operations the inner loop does is $c \cdot i$

- Now, we can sum this over all iterations of the outer loop to get the total number of primitive operations: $\sum_{i=1}^{n} (c \cdot i + d)$, where $d$ is the constant runtime from the comparison and increment operations of the outer loop

- From this, we can rewrite the sum as: $c \sum_{i=1}^{n} i + d \cdot n$ → $c \cdot \frac{n(n+1)}{2} + d \cdot n$, since $\sum_{i=1}^{n} i = \frac{n(n+1)}{2}$

- Simplifying, we find this to be $\frac{c}{2} n^2 + \left(\frac{c}{2} + d\right) n$

- In big-O notation, we ignore lower order terms and constants, therefore the runtime is $O(n^2)$

This highlights an important point: an inner loop nested within an outer loop that runs $n$ times does not always execute exactly $n^2$ iterations in total. In this case the inner loop only runs $i$ times for the $i$th iteration of the outer loop. The analysis still results in $O(n^2)$, but the actual number of operations is roughly half that of the previous example, where both loops ran $n$ times.
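The difference between the two nested-loop examples can be seen by counting inner-loop iterations directly. This is a minimal sketch with assumed function names. It counts only inner iterations and ignores the outer loop's own constant overhead.

```python
def count_dependent_inner(n):
    """Inner loop runs i times on the i-th outer iteration."""
    iterations = 0
    for i in range(1, n + 1):
        for _ in range(1, i + 1):
            iterations += 1
    return iterations


def count_full_inner(n):
    """Inner loop runs n times on every outer iteration."""
    iterations = 0
    for _ in range(1, n + 1):
        for _ in range(1, n + 1):
            iterations += 1
    return iterations


n = 100
dependent = count_dependent_inner(n)
full = count_full_inner(n)
print(dependent, full, dependent / full)
```

The dependent loop matches the triangular-number formula $n(n+1)/2$, so the ratio between the two counts approaches one half while both remain quadratic in $n$.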

I must admit, these notes, although relatively comprehensive, have room for improvement. So far, I’ve found the study of Big O, Big Theta, and Big Omega challenging when understood via proofs.

My plan is to revisit and add to these notes as my understanding of the subject continues to grow. This is a journey, and I believe in the power of constant learning and improvement. Sharing these notes publicly isn’t just about providing a resource to others; it’s also about motivating myself.

By putting my notes out there, I’m compelled to take better, more deliberate notes during class. It’s a commitment to myself to strive for clarity in understanding and precision in note-taking. I hope that my journey will inspire you in some way and lead to shared growth.